GreyLattice-01 (GL01): What is Computational Neuroscience?

Dive into “GreyLattice-01” to explore Computational Neuroscience and understand the role it plays in Neurotechnology

Index

(1) What is the current state of Computational Neuroscience?

(2) Potential Research Areas and Problems Being Solved Today

(i) Neural Coding and Information Processing

(ii) Synaptic Plasticity and Learning

(iii) Neural Dynamics

(iv) Brain-Machine Interfaces

(v) Neuromorphic Computing

Even though it sounds quite modern, the term “Computational Neuroscience” was introduced in 1985 by Professor Eric L. Schwartz at a conference in Carmel, California.

It was an attempt to unify a lot of different research, ideas, and concepts that in one way or another used electronics, mathematics, and the computers of the time to understand the brain and discover new information about how it works. Examples of such research are neural modelling (creating mathematical and computational models to understand how the brain and nervous system function), brain theory (theories on the brain and how it works), neural networks (old AI stuff that led to ChatGPT today), and more.

The conference aimed to bring together leading researchers to discuss and define the emerging field, addressing the computational problems associated with understanding the brain’s structure at multiple levels — from synaptic (point or junction at which electrical signals move from one neuron to another) to system (overall) levels.

The conference covered a range of topics, including the bio-physical (studying how neurons function at molecular and cellular levels) properties of neurons, neural network simulations, and the neurobiological significance of learning models.

But this event alone was not responsible for the solidification of Computational Neuroscience as a subject. Two more events contributed to the acknowledgment of this subject by the research community.

(1) The first of the annual international meetings focused on computational neuroscience was organized by James M. Bower and John Miller in 1989. This meeting and the ones that followed further solidified the field’s academic and research foundations, and they continue to be a crucial platform for sharing advancements and fostering collaboration among computational neuroscientists. (https://www.cnsorg.org/)

(2) The first doctoral program focusing explicitly on computational neuroscience was established as the “Computational and Neural Systems” Ph.D. program at the California Institute of Technology (Caltech) in 1985. This acknowledged the field and paved the way for training interdisciplinary scientists who wished to specialize in this domain.

These three major events laid the foundation for the development and growth of Computational Neuroscience, leading to major breakthroughs in understanding the brain and using this knowledge for different applications.

Note: I shall dedicate another blog to the research and other work conducted on this subject before its foundation in the 1980s, as a precursor to the history blog.

(1) What is the current state of Computational Neuroscience?

Computational Neuroscience is now defined as an interdisciplinary field.

Which disciplines? -> Mathematics, Neuroscience, Computer Science, Physics, Engineering, and more.

To do what? -> To “computationally” understand the mechanisms and principles of the entire nervous system (including the brain, spinal cord, and more).

Why? -> To develop theoretical and computational models that can capture and predict the dynamics of neural systems and explain how neural circuits process information, generate behaviours, and contribute to cognitive functions, by integrating data from experimental neuroscience with computational approaches.

So what is the overall goal?

Simply put, we want to simulate or replicate the entire brain computationally. This could help answer questions about sentience and the origin of consciousness, help us understand and cure brain diseases, let us “do some cool Cyberpunk 2077 mind hacking”, and, far more speculatively, maybe even contribute to curing cancer or to testing Darwin's theory of evolution. This is only possible when we develop theoretical models of the brain, which can then be applied computationally to produce interesting discoveries and results.

I predict that this subject will have a major breakthrough that will change the world, similar to what OpenAI has done with Artificial Intelligence as a whole.

Neuroscience and computational techniques have a dynamic relationship where each field propels the other forward. Neuroscientific discoveries spark new computational methods, while computational advances enable ground-breaking neuroscience experiments and theories. This ongoing synergy drives continuous evolution in both fields.

Neural networks, a cornerstone of modern AI, have their roots in our understanding of the brain’s neural processes. These networks have revolutionized technology, giving rise to incredible innovations in areas like machine learning and natural language processing. As AI develops, it provides new tools for neuroscientists, allowing us to delve deeper into the brain’s mysteries.

This mutual advancement means that as we create more sophisticated AI, we also uncover new insights into how our brains work. It’s a continuous feedback loop: neuroscience informs computational techniques, and those techniques, in turn, enhance our understanding of the brain. This dynamic interaction is why computational neuroscience is always evolving and why it’s such an exciting field to watch.

So, what are the “interesting” areas of research and problems being solved right now?

(2) Potential Research Areas and Problems Being Solved Today

(i) Neural Coding and Information Processing

Before I explain that, some context first:

First and foremost, what are neurons (nerve cells), you may ask? They are the fundamental cellular units of the nervous system in all animals.

(No, plants do not have neuronal networks or brains. Exception -> Trichoplax and sponges are the only animals with no neurons.)

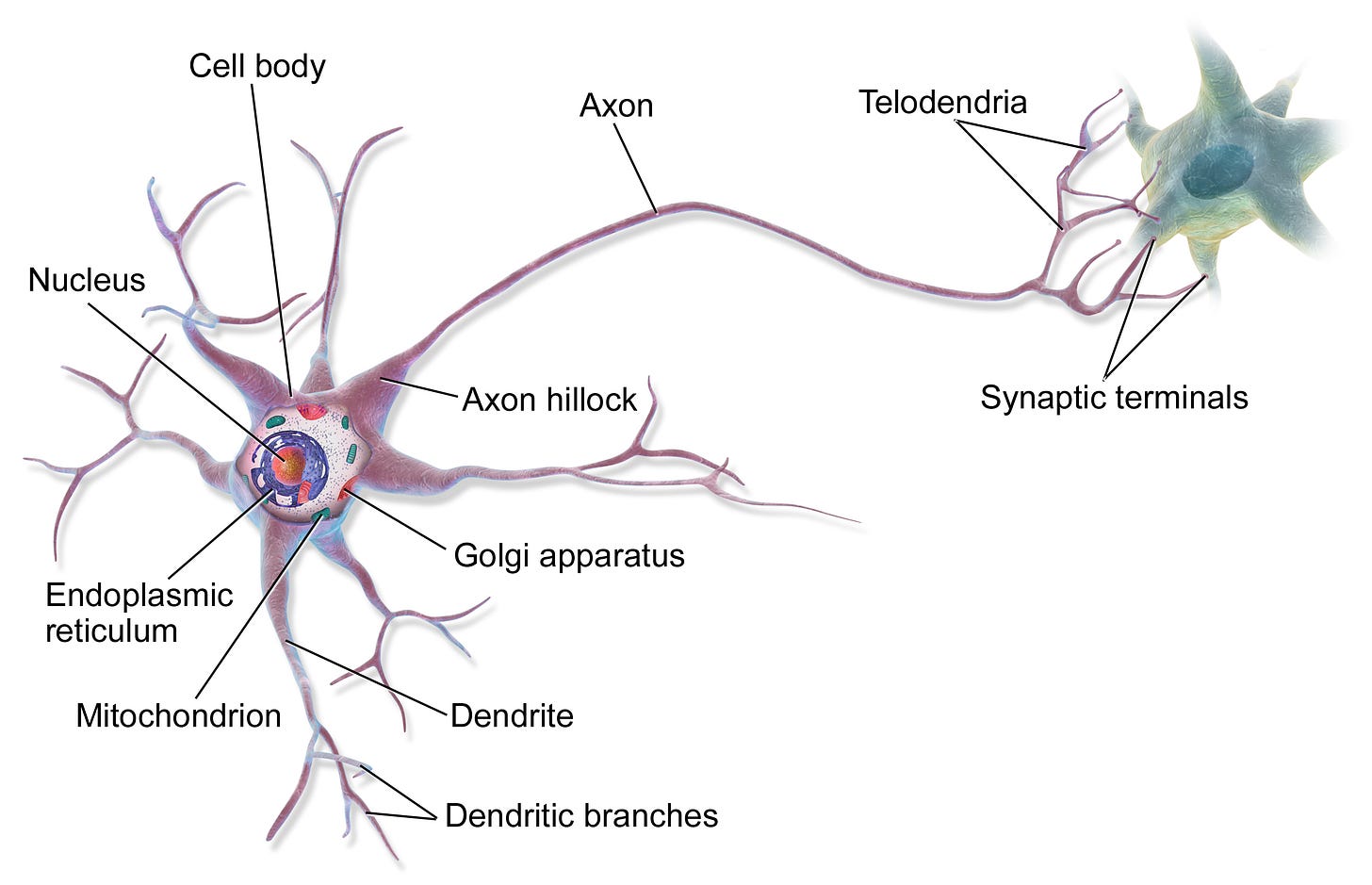

A neuron looks like the diagram above.

Neurons are essentially what the nerves in the nervous system are made of, and what the grey and white matter of the brain consist of (excuse the simplification, neuroscience majors). Without them, we wouldn’t be intelligent beings or have any of the thinking capabilities that we humans have today.

What do they do? Simply put, they transmit information by sending and receiving electrical signals between one another (these signals are called nerve impulses, action potentials, or spikes).

So how do electrical signals store data and information and how much can they store?

Well, it is often estimated that the brain can store about 2.5 petabytes of memory (1 petabyte is literally a million gigabytes, and I don’t remember what I had for lunch today), and this information (simplified) is stored as “patterns”.

Patterns -> meaning patterns in the flow of electrical and chemical signals between neurons. Neurons are connected to one another in a network of networks (like the internet) containing an astronomically large number of possible pathways, and each unique pathway the signals can take results in a different memory. So when you learn something new and store it in memory, you are creating a different pathway in the brain to store that piece of information (not voluntarily, of course; the brain does it for you).

So, for example, if neurons are connected at three points -> A, B, and C, then ABC is one pathway that translates to information X (for example) and CAB is another that translates to information Y.
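Here is a toy Python sketch of the A, B, C example, assuming (purely for illustration) that a memory is identified by the order in which the three points are activated. The names `pathway_to_memory` and `recall` are hypothetical and made up for this example; real memory storage is far messier than a lookup table.

```python
from itertools import permutations

# Three hypothetical connection points from the example above.
points = ["A", "B", "C"]

# Every ordering of the three points is treated as a distinct pathway.
pathways = ["".join(p) for p in permutations(points)]

# Toy lookup: two of the pathways are tied to stored information.
pathway_to_memory = {"ABC": "information X", "CAB": "information Y"}

def recall(pathway):
    """Return the information tied to a pathway, or None if nothing is stored."""
    return pathway_to_memory.get(pathway)

print(len(pathways))   # how many distinct orderings three points give
print(recall("ABC"))
print(recall("CAB"))
```

Even three points already give several distinct orderings, which hints at how quickly the number of possible pathways grows as more neurons are involved.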

Each neuron can form thousands of synaptic connections with other neurons, creating an incredibly dense and complex network. The specific patterns of neural activity and the plasticity of these connections (simply put, the ability of the nervous system to change the structure, functions, and connections of neurons) allow the brain to store an immense amount of information in a highly efficient manner.

After all this context, what is Neural Coding?

It is basically the study of how neurons encode and transmit information: understanding the patterns of electrical activity in neurons, as described above, and how these patterns represent sensory information, motor commands, and cognitive states.

Computational models help decode signals (neural activity recorded using advanced technology) and reveal how information is processed in the brain. Understanding the workings of these advanced technologies is currently beyond me, but I will eventually understand them and make sure to write about them in a simple manner for my readers. And that, more or less, is the basic definition of neural coding as a research topic.
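To make the encode/decode idea a little more concrete, here is a minimal Python sketch of rate coding, one simple coding scheme in which the strength of a stimulus is represented by how often a neuron spikes. The function names, the rates, and the random spike model are my own assumptions for illustration, not a real recording or decoding pipeline.

```python
import random

def encode(rate_hz, duration_s=1.0, dt=0.001, rng=None):
    """Encode a firing rate as a spike train: one 0/1 entry per time bin."""
    if rng is None:
        rng = random.Random(0)
    n_bins = int(round(duration_s / dt))
    p_spike = rate_hz * dt  # probability of a spike in each small time bin
    return [1 if rng.random() < p_spike else 0 for _ in range(n_bins)]

def decode(spike_train, dt=0.001):
    """Decode by estimating the firing rate in spikes per second."""
    duration_s = len(spike_train) * dt
    return sum(spike_train) / duration_s

rng = random.Random(42)
weak = encode(10, rng=rng)    # a faint stimulus: roughly 10 spikes per second
strong = encode(80, rng=rng)  # a strong stimulus: roughly 80 spikes per second
print(decode(weak), decode(strong))
```

The decoder never sees the original stimulus, only the spikes, yet it can still tell the strong stimulus from the weak one. Real decoding models are far more sophisticated, but the principle is the same.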

(ii) Synaptic Plasticity and Learning

So how are these electrical signals passed from one neuron to another? Through a synapse between two neurons, which is essentially a junction or gateway connecting the “axon” of the neuron sending the signal (the long sausage-like portion of the neuron seen in the middle of the diagram) to the “dendrite” of the neuron receiving the signal (part of the head-like portion of the neuron, to the left of the axon in the diagram).

And the junction looks somewhat like the above diagram. The small particle-like structures are not particles at all but chemicals named “neurotransmitters”, which are responsible for carrying the signal across the junction from one neuron to the next.

The process of how and why they transmit the signal is an advanced topic currently beyond my understanding. I shall research it further before I write about it.

So what is synaptic plasticity and why is it being researched as a part of this subject?

It refers to the ability of synapses, the connections between neurons, to change their strength. This adaptability is fundamental to learning and memory, allowing the brain to store and retrieve information effectively.

You may have had questions somewhat similar to,

How do we memorize something and remember it for longer, and conversely, why do we forget certain things? Now that you know every memory is technically a pattern in the flow of electric charges between neurons, you must question the probability that the pattern will happen again, that is, that the charges will flow in the same way.

How is it a probability?

Because not all synaptic connections are the same: some are strong and some are weak. The strong synaptic connections are the ones that are reinforced/stimulated frequently by the environment, ourselves, or other factors, and the weaker ones are those that are stimulated rarely.

For example, when listening to a song repeatedly, after some period of time, you can replay the song in your head without any issues and remember different parts of the song and its features.

It doesn’t matter whether it's a song you like or don’t like; the emotion of liking or disliking it just changes how quickly you remember it (meaning emotion, in some sense, speeds up the process of strengthening).

The effect of emotions on the brain and its functioning is another topic to be discussed another time.

Where does computational neuroscience play a role?

Researchers use computational models to simulate synaptic plasticity mechanisms, and use electrophysiology (the study of the electrical properties of biological cells and tissues within the nervous system; the EEG test is one example) and imaging studies to observe these changes in living neurons. These methods help in understanding how synaptic changes support memory formation and learning.

Use Cases?

→ Simulating LTP (Long-Term Potentiation) and LTD (Long-Term Depression), the processes that respectively strengthen and weaken the connection between two neurons.

→ Spike-Timing-Dependent Plasticity (STDP) models. Each action potential can be called a spike, and STDP is a mechanism where the relative timing of spikes between neurons determines whether the synaptic connection is strengthened or weakened.

→ Hebbian learning algorithms, which are based on the principle often summarized as “cells that fire together, wire together.” It’s a theory explaining how synaptic connections between neurons strengthen through repeated and persistent stimulation.
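The use cases above can be sketched in a few lines of Python. This is a minimal sketch, assuming a basic Hebbian rule and a common textbook form of pair-based STDP with an exponential timing window. The parameter names and values (`learning_rate`, `a_plus`, `a_minus`, `tau`) are illustrative assumptions, not measured biological constants.

```python
import math

def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Hebb's rule: the weight grows when pre and post are active together."""
    return weight + learning_rate * pre * post

def stdp_update(weight, t_pre, t_post, a_plus=0.05, a_minus=0.05, tau=20.0):
    """Pair-based STDP with an exponential window (spike times in ms).

    Pre fires before post -> strengthening (LTP-like).
    Post fires before pre -> weakening (LTD-like).
    """
    delta_t = t_post - t_pre
    if delta_t > 0:
        return weight + a_plus * math.exp(-delta_t / tau)
    if delta_t < 0:
        return weight - a_minus * math.exp(delta_t / tau)
    return weight

w = 0.5
print(hebbian_update(w, pre=1, post=1))      # both active: weight grows
print(hebbian_update(w, pre=1, post=0))      # only one active: unchanged
print(stdp_update(w, t_pre=10, t_post=15))   # pre leads post: strengthened
print(stdp_update(w, t_pre=15, t_post=10))   # post leads pre: weakened
```

Notice that the closer together the two spikes are in time, the bigger the change in the STDP rule, which is the "timing" part of spike-timing-dependent plasticity.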

These examples and theories will be explained in further detail in other blogs.

(iii) Neural Dynamics

We understand to a certain extent how information is transmitted and stored, but how do these networks of neurons come together to generate such complex behaviours and cognitive functions? These are the questions that the study of Neural Dynamics seeks to answer.

Researchers examine the dynamic properties of neural circuits and their roles in perception, decision-making, motor control, and other brain functions. It also involves studying the time-dependent changes in neural activity and how these changes underpin various brain functions. Disruptions in these dynamics are associated with brain disorders, so understanding Neural Dynamics is crucial to possibly finding cures for serious brain disorders and diseases.

But, How is this research done “computationally”?

To research this subject further, researchers need models of networks of neurons that can be simulated and tested under various conditions. These complex models are run on advanced computing systems, which lets researchers create and test theories and try to solve open problems in the field.

Use Cases

→ Modelling Neural Activity: Computational neuroscience aims to create accurate models that simulate the dynamic behaviour of neurons and neural circuits. These models often use differential equations and other mathematical tools to describe how neuronal states change over time in response to stimuli and interactions with other neurons.

→ Insights into Neurological Disorders: Analysing neural dynamics can provide insights into the mechanisms underlying neurological disorders. By modelling the disruptions in neural dynamics associated with conditions like epilepsy, Parkinson’s disease, or schizophrenia, researchers can better understand these disorders and develop targeted interventions.

→ Experimental Validation: Computational neuroscience provides a framework to test hypotheses about neural dynamics. Researchers use experimental data from techniques like multi-electrode arrays and calcium imaging to validate and refine their computational models. These experiments help in understanding the real-time dynamics of neural circuits and in confirming theoretical predictions made by the models.

→ Understanding Complex Behaviours: Neural dynamics seeks to understand how the collective behaviour of neurons leads to complex brain functions. By simulating neural dynamics, computational models help researchers predict how changes at the neuronal level can influence overall brain activity and behaviour. For example, models can simulate how networks of neurons generate rhythmic activities or how they synchronize to produce coherent behaviours.
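As a small illustration of "using differential equations to describe how neuronal states change over time", here is a sketch of a leaky integrate-and-fire (LIF) neuron, one of the simplest neuron models, stepped forward with Euler integration. The equation used is tau * dV/dt = -(V - v_rest) + resistance * current. All parameter values and units below are illustrative assumptions rather than fitted to any real neuron.

```python
def simulate_lif(current, t_max=100.0, dt=0.1, tau=10.0,
                 v_rest=-65.0, v_thresh=-50.0, v_reset=-65.0, resistance=10.0):
    """Euler integration of tau * dV/dt = -(V - v_rest) + resistance * current.

    Returns the list of spike times in ms. Units are illustrative only.
    """
    v = v_rest
    spike_times = []
    steps = int(round(t_max / dt))
    for step in range(steps):
        dv = (-(v - v_rest) + resistance * current) / tau
        v += dv * dt
        if v >= v_thresh:            # threshold crossed: record a spike, reset
            spike_times.append(step * dt)
            v = v_reset
    return spike_times

print(len(simulate_lif(current=1.0)))  # weak input: voltage stays below threshold
print(len(simulate_lif(current=2.0)))  # stronger input: the neuron fires repeatedly
print(len(simulate_lif(current=3.0)))  # even stronger input: it fires more often
```

Models used in research connect many such neurons (and far more detailed ones) into networks, but the core loop of "update the state a tiny step at a time" stays the same.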

So computational neuroscience is essential for neural dynamics as seen from these use cases.

Do all these topics sound similar?

It’s because they are closely related to one another and play a role in each other's research in some form or another. Although they all deal with neurons and their structures, each of the three topics has a different focus.

In the case of Neural Coding, the focus is on → Understanding how neurons encode and transmit information.

In the case of Synaptic/Neural Plasticity → How changes in synaptic strength contribute to learning and memory.

and finally, in the case of Neural Dynamics → How networks of neurons interact over time to produce complex behaviours.

While each area has a distinct focus, they are interconnected. Advances in one area can inform and enhance research in others, creating a comprehensive understanding of brain function through computational neuroscience.

The last two topics focus on technological applications that have already been implemented, with active research being conducted to scale them.

(iv) Brain-Machine Interfaces

Have you heard of Neuralink yet? Neuralink, founded by Elon Musk, is at the forefront of BMI research and development. The company aims to create high-bandwidth, minimally invasive neural interfaces (granted, the first chip had to be implanted through brain surgery) to connect humans and computers directly. Yup, cool chips in your brain that let you control computational systems in the environment. We are close to the cyberpunk reality, which is cool and scary at the same time.

I will write in detail about Neuralink and its current status in a separate blog post of its own, as Neuralink deserves it.

So what are brain-machine interfaces?

Brain-machine interfaces (BMIs) are at the frontier of neuroscience and technology, combining ideas and research applications of computational neuroscience, biomedical engineering, and machine learning. These systems enable direct communication between the brain and external devices, opening possibilities for restoring or augmenting sensory and motor functions, and even for new forms of human-computer interaction.

How does a machine communicate with our brain?

For any decision made or action taken, our brain fires action potentials (spikes), as you have seen earlier in this blog. These signals carry every form of control, decision, or action from the nervous system to itself or to any other part of an animal's body.

The job of a BMI is to sense and decode these neural signals and translate them into commands that can control external devices, or anything else that can be driven by a signal derived from an electrical one. This interaction can be bidirectional: the BMI can also provide sensory feedback to the brain, creating a closed-loop system that mimics natural sensory-motor processes (how you move your hands and legs and know and feel that you are moving them).

How do they work?

Key Components of BMIs

1. Signal Acquisition:

Techniques: Electroencephalography (EEG), intracortical electrode arrays, and electrocorticography (ECoG) are commonly used to record neural activity.

Purpose: These techniques capture the brain’s electrical activity, which forms the raw data for BMIs.

2. Signal Processing:

Methods: Advanced algorithms filter and pre-process the neural signals to extract meaningful features.

Focus: Noise reduction, artifact removal, and feature extraction to ensure high-quality data for decoding.

3. Decoding Algorithms:

Machine Learning: Decoding algorithms, often based on machine learning, translate neural signals into commands. Techniques include supervised learning, reinforcement learning, and deep learning.

Role in BMIs: These algorithms learn to interpret the patterns of neural activity associated with specific thoughts, movements, or intentions.

4. Device Control:

Applications: BMIs can control a variety of devices, from robotic arms and prosthetics to computer cursors and wheelchairs.

Functionality: The decoded signals are used to generate real-time commands for these devices, enabling users to perform tasks with their thoughts.

5. Sensory Feedback:

Implementation: Sensory feedback systems deliver information back to the brain, often through stimulation techniques like electrical or haptic feedback.

Importance: This feedback helps the brain adapt and refine control, creating a more intuitive and effective interaction with the external device.

And that in some sense is the basics of the working of a brain-machine interface.
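The first four components can be sketched as a toy Python pipeline. Everything here is a stand-in that I made up for illustration: the "brain signal" is just random noise around a level that depends on the intended direction, the filter is a plain moving average, and the decoder is a fixed threshold rather than a trained machine learning model. The sensory feedback step is left out to keep the sketch short.

```python
import random

def acquire(intent, n_samples=200, rng=None):
    """Stand-in for signal acquisition: a noisy signal whose mean depends on intent."""
    if rng is None:
        rng = random.Random(0)
    level = {"left": -1.0, "right": 1.0}[intent]
    return [level + rng.gauss(0.0, 1.0) for _ in range(n_samples)]

def preprocess(signal, window=10):
    """Signal processing: a moving-average filter to reduce noise."""
    return [sum(signal[i:i + window]) / window
            for i in range(len(signal) - window + 1)]

def extract_feature(filtered):
    """Feature extraction: the mean amplitude of the filtered signal."""
    return sum(filtered) / len(filtered)

def decode(feature):
    """Decoding: a fixed threshold stands in for a trained classifier."""
    return "right" if feature > 0.0 else "left"

def control_cursor(position, command, step=1):
    """Device control: move a 1-D cursor according to the decoded command."""
    return position + step if command == "right" else position - step

rng = random.Random(7)
position = 0
for intent in ["right", "right", "left"]:
    raw = acquire(intent, rng=rng)
    command = decode(extract_feature(preprocess(raw)))
    position = control_cursor(position, command)
print(position)  # two steps right and one step left
```

A real BMI replaces each of these functions with something far more serious (electrode hardware, artifact removal, learned decoders), but the data still flows through the same stages.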

(v) Neuromorphic Computing

Neuromorphic computing is another revolutionary technology resulting from computational neuroscience research: it takes neuroscience concepts, applies them to microprocessor technologies, and creates something entirely new.

It’s an exciting and rapidly evolving field that draws inspiration from the structure and function of the human brain to develop new computing architectures. This innovative approach aims to overcome the limitations of traditional computing systems by mimicking the neural processes found in biological systems.

What if the design of software and hardware systems emulated neural architectures and the operation of the brain? Unlike conventional computers that use the von Neumann architecture, neuromorphic systems are based on parallel, distributed processing, which is similar to how neurons and synapses work in the brain.

Key Concepts in Neuromorphic Computing

For the AI peeps out there, this might be an exciting one.

→ Spiking Neural Networks (SNNs):

Anyone who is learning or at least has a vague idea about machine learning or artificial intelligence knows what neural networks are. A neural network is a method in artificial intelligence that teaches computers to process data in a way that is inspired by the human brain. It uses interconnected nodes or neurons in a layered structure that resembles the human brain.

It creates an adaptive system that computers use to learn from their mistakes and improve continuously. Thus, artificial neural networks attempt to solve complicated problems, like summarizing documents or recognizing faces, with greater accuracy. Many types of neural networks exist such as CNNs, RNNs, and more that are responsible for a majority of AI use cases and applications today.

Neurons and Layers: Traditional neural networks (like feedforward neural networks, convolutional neural networks, and recurrent neural networks) consist of layers of interconnected neurons. Each neuron receives input, processes it using a weighted sum, applies an activation function, and passes the result to the next layer.

Continuous Values: The neurons in these networks operate using continuous values (real numbers). Inputs and outputs of neurons are typically real-valued and can take any value within a specified range, depending on the activation function used (e.g., sigmoid, ReLU).
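A single traditional artificial neuron, as described above, fits in a few lines of Python. This is a minimal sketch with made-up inputs and weights (in a real network the weights would be learned during training).

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    """Pass positive values through and clamp negative values to 0."""
    return max(0.0, x)

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, followed by an activation function."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)

inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.1]   # illustrative values, not learned
print(neuron(inputs, weights, bias=0.0, activation=sigmoid))
print(neuron(inputs, weights, bias=0.0, activation=relu))
```

Both outputs are ordinary real numbers, which is exactly the "continuous values" point: the neuron always produces some value, on every pass, for every input.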

→ So what is an SNN and how is it truly different?

Biological Inspiration: SNNs are designed to mimic the way neurons communicate through spikes or action potentials. Unlike traditional artificial neural networks that use continuous values, SNNs process information in discrete spikes, making them more energy-efficient and closer to biological neural processing.

Event-Driven Processing: SNNs process information in an event-driven manner. Computation occurs only when spikes are generated, leading to potentially lower energy consumption compared to traditional neural networks.

Spike Timing: Information is encoded in the timing of spikes. This can involve complex temporal patterns where the precise timing of spikes conveys significant information (temporal coding).

Spike-Timing-Dependent Plasticity (STDP): SNNs often use STDP, a learning rule where the timing of spikes influences synaptic strength changes. This is inspired by biological processes and differs from the gradient-based learning in traditional networks.
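To show what "event-driven" and "spike timing" mean in practice, here is a toy spiking neuron in Python that only does any work when an input spike arrives. The class name, threshold, and time constant are hypothetical choices for illustration; this is not how any particular SNN library implements its neurons.

```python
import math

class EventDrivenNeuron:
    """Toy spiking neuron that is updated only when an input spike arrives."""

    def __init__(self, threshold=1.0, tau=20.0):
        self.threshold = threshold  # potential needed to fire
        self.tau = tau              # leak time constant in ms
        self.potential = 0.0
        self.last_time = 0.0

    def receive(self, time, weight):
        """Process one input spike; return True if the neuron fires."""
        # Apply the leak for the silent interval since the last event.
        elapsed = time - self.last_time
        self.potential *= math.exp(-elapsed / self.tau)
        self.last_time = time
        # Add the contribution of the incoming spike.
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0    # reset after firing
            return True
        return False

# Input spikes as (time in ms, synaptic weight) pairs.
close_together = [(1.0, 0.6), (2.0, 0.6)]
far_apart = [(1.0, 0.6), (100.0, 0.6)]

neuron = EventDrivenNeuron()
print([neuron.receive(t, w) for t, w in close_together])
neuron = EventDrivenNeuron()
print([neuron.receive(t, w) for t, w in far_apart])
```

The two inputs carry the same total "strength", but only the spikes that arrive close together push the neuron over its threshold. Between spikes nothing is computed at all, which is where the potential energy savings come from.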

→ Neuromorphic Hardware:

Imagine a world where microprocessors don’t just compute but think and learn like the human brain. This is the amazing research being done in the area of neuromorphic hardware, where engineers and scientists are designing systems that emulate the brain’s unique capabilities. Here’s how they are doing it:

Neuromorphic hardware merges the best of both analog and digital worlds. Just like our brains operate with continuous signals (analog) and discrete spikes (digital), these systems combine:

Analog Components: These replicate the continuous, nuanced behaviours of biological neurons. Think of it as capturing the fluidity of how neurons vary their responses.

Digital Components: These provide the precision and efficiency of digital technology, enabling robust connectivity and integration, much like how synaptic connections work in our brains.

You would have heard of transistors, but have you heard of memristors?

Just like how transistors are the building blocks of our modern-day processors, memristors are the building blocks of neuromorphic circuits. They have a few cool properties:

Adaptive Resistance: They change their resistance based on past electrical activity, mirroring how synapses strengthen or weaken over time — which mimics the second process talked about in this blog, “Synaptic Plasticity”

Data Storage and Processing: Unlike traditional memory elements, memristors can store information and process it simultaneously, much like the brain’s neurons, making neuromorphic systems faster and more energy efficient!
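The two properties above can be mimicked with a heavily simplified toy model in Python. To be clear, this is my own illustrative assumption of how to capture the idea (a conductance that drifts with the voltage applied in the past, within fixed limits), not a physical model of any real memristor device.

```python
class ToyMemristor:
    """Highly simplified memristor: its conductance depends on past voltage."""

    def __init__(self, conductance=0.5, g_min=0.1, g_max=1.0, rate=0.1):
        self.conductance = conductance
        self.g_min = g_min
        self.g_max = g_max
        self.rate = rate   # how quickly the state drifts (hypothetical value)

    def apply(self, voltage, duration=1.0):
        """Apply a voltage pulse; return the current and update the stored state."""
        current = self.conductance * voltage       # "processing": Ohm's law
        change = self.rate * voltage * duration    # "storage": the state drifts
        self.conductance = min(self.g_max,
                               max(self.g_min, self.conductance + change))
        return current

device = ToyMemristor()
for _ in range(3):
    device.apply(+1.0)      # repeated positive pulses strengthen the "synapse"
print(device.conductance)
for _ in range(5):
    device.apply(-1.0)      # negative pulses weaken it again
print(device.conductance)
```

The same element both answers with a current (processing) and remembers what happened to it (storage), which is the property that makes memristors a natural fit for building artificial synapses.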

Neuromorphic hardware isn’t just an incremental step; it’s a revolutionary leap toward creating machines that can think, learn, and adapt in real time, just like us. The integration of analog-digital hybrids and memristors paves the way for:

Ultra-Efficient Computing: Imagine computers that are not only faster but also incredibly energy-efficient, capable of processing vast amounts of data with minimal power consumption.

Real-Time Adaptation: Devices that can learn from their environment and experiences, making them smarter and more responsive over time.

So what is the status of its research today?

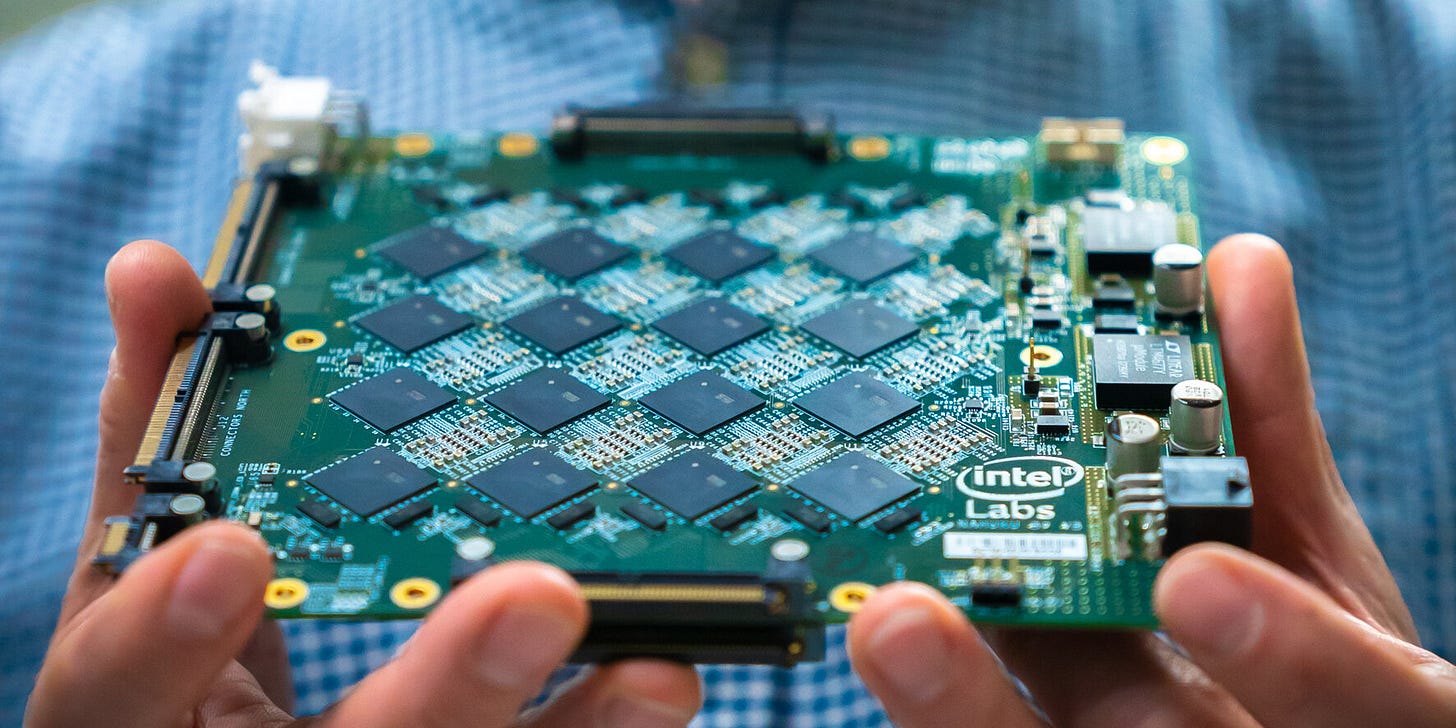

Intel and IBM have both made major strides in the research of neuromorphic computing with Stanford University also contributing to the research.

Based on preliminary research, I have found Intel’s research, especially the Loihi chip, to be an extremely exciting development in the field.

Intel’s Loihi chip represents a major advancement in neuromorphic hardware. The chip supports complex neuromorphic algorithms and demonstrates the potential for real-time learning and adaptation in AI applications. Do check out their website on their current research:

Neuromorphic Computing and Engineering with AI | Intel® (https://www.intel.com/content/www/us/en/research/neuromorphic-computing.html)

And that's all I currently have to say about Neuromorphic Computing and the major research areas in Computational Neuroscience.

FYI, the proceedings of the first conference on computational neuroscience were published as a book named “Computational Neuroscience” in 1990, edited by Eric L. Schwartz himself.

Google Scholar profile of Eric L. Schwartz -> Profile Link

And you can still buy the book online even today! You can find it here: Book link, and get it on Amazon here: Buy the book

You have reached the end of the article (you are truly amazing). Mentioned below are the sources of information that I used to research and write it.

Thank you for the read. I hope this blog sparks some interest in the subject for anyone and everyone out there. The goal is to have a lot more people investing their time, money, and energy into research and development in Neurotechnology, leading to the development of the world's greatest companies in the near future.

Sources:

(2) https://mitpress.mit.edu/9780262691642/computational-neuroscience/

(3) https://www.bionity.com/en/encyclopedia/Computational_neuroscience.html

(4) https://aws.amazon.com/what-is/neural-network/

(5) https://www.intel.com/content/www/us/en/research/neuromorphic-computing.html

(6) AI assistance — grammar correction and readability enhancement, finding information sources, General research, Picture Generation

(7) Google Search